Kubernetes-based Collector

The JupiterOne Integration Operator is a Kubernetes-native solution for running JupiterOne integrations within your Kubernetes cluster. It manages Custom Resource Definitions (CRDs) for integration management and provides a scalable approach for organizations already using Kubernetes.

Prerequisites

Cluster Requirements

- Kubernetes 1.16+

- Helm 3+

JupiterOne Requirements

- Account ID: found at `/settings/account-management`

- API Token: create one at `/settings/account-api-tokens` or `/settings/api-tokens` with the following permissions. If using a personal token (`/settings/api-tokens`), your user account must have these permissions assigned.

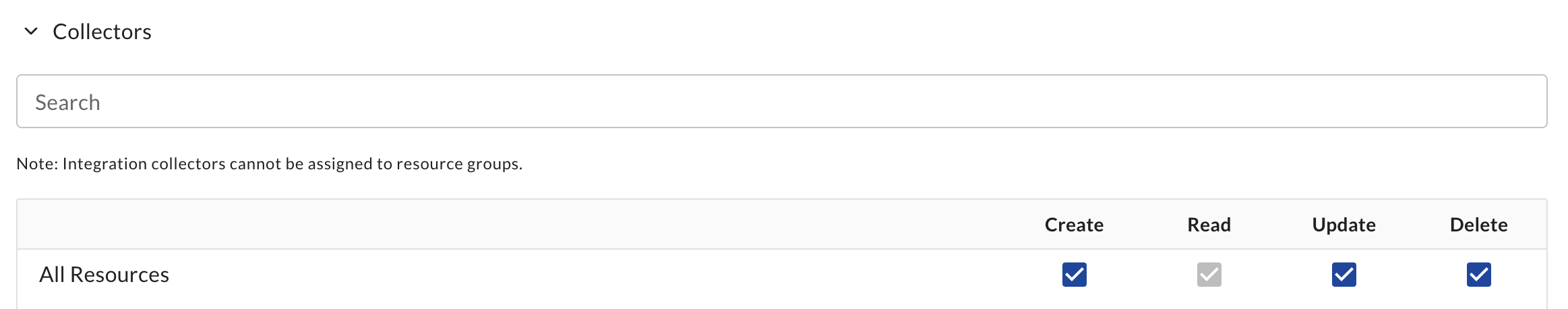

| Permission | Required | When |

|---|---|---|

| Collector Create/Read/Update/Delete | Yes | Always |

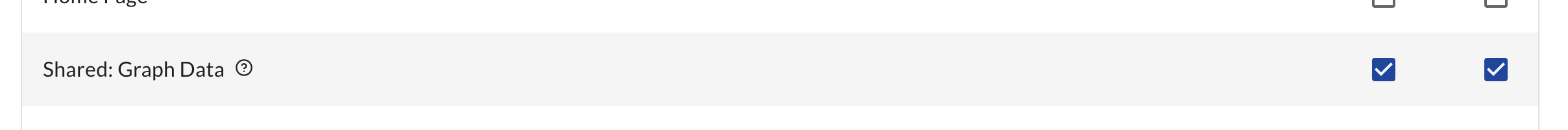

| Shared: Graph Data Read/Write | Yes | Always |

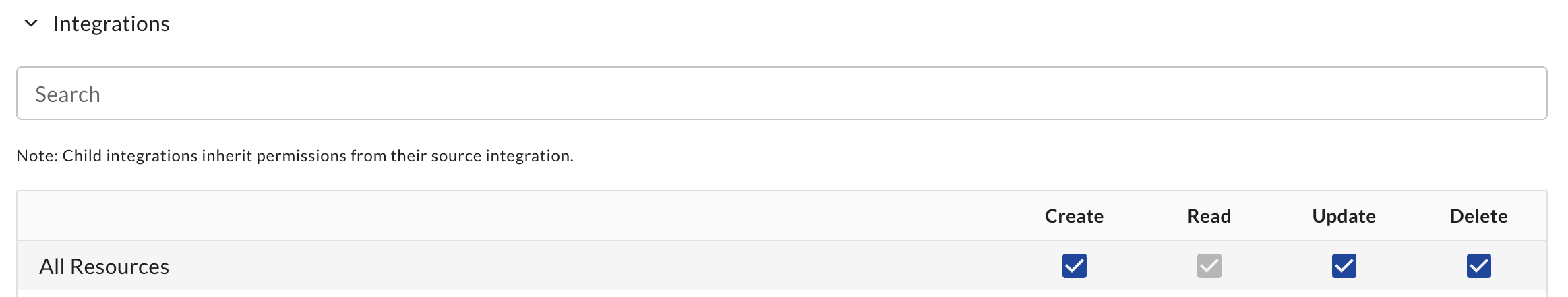

| Integration Create/Read/Update/Delete | No | Only if creating Integrations via Helm |

Permission screenshots

Installation

Installation is handled by two Helm charts:

- Kubernetes Operator: This chart installs the controllers that manage the CRDs. It can be upgraded independently of the Integration Runner.

- Integration Runner: This chart installs an `integrationrunner` custom resource that tells the operator to create a new collector and register it with JupiterOne.

Kubernetes Operator

First, install the operator, which manages all the CRDs.

- Add the JupiterOne Helm repository:

  ```shell
  helm repo add jupiterone https://jupiterone.github.io/helm-charts
  helm repo update
  ```

- Create a namespace:

  ```shell
  kubectl create namespace jupiterone
  ```

- Install the Integration Operator:

  ```shell
  helm install integration-operator jupiterone/jupiterone-integration-operator --namespace jupiterone
  ```
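If you script the installation (for example from a CI job), the steps above can be wrapped in a small Python helper. This is a minimal sketch, assuming `helm` and `kubectl` are on the `PATH` and the current kubeconfig context points at the target cluster; the function names are hypothetical. It substitutes `helm upgrade --install` for `helm install` so that re-running the script upgrades the existing release instead of failing, and it only creates the namespace when it is missing.

```python
import subprocess
from typing import List

REPO_NAME = "jupiterone"
REPO_URL = "https://jupiterone.github.io/helm-charts"
OPERATOR_CHART = "jupiterone/jupiterone-integration-operator"


def operator_helm_steps(namespace: str = "jupiterone") -> List[List[str]]:
    """Return the Helm commands from the steps above as argv lists."""
    return [
        ["helm", "repo", "add", REPO_NAME, REPO_URL],
        ["helm", "repo", "update"],
        # `upgrade --install` installs on the first run and upgrades afterwards.
        ["helm", "upgrade", "--install", "integration-operator", OPERATOR_CHART,
         "--namespace", namespace],
    ]


def ensure_namespace(namespace: str = "jupiterone") -> None:
    """Create the namespace only if `kubectl get namespace` cannot find it."""
    probe = subprocess.run(["kubectl", "get", "namespace", namespace],
                           capture_output=True, text=True)
    if probe.returncode != 0:
        subprocess.run(["kubectl", "create", "namespace", namespace], check=True)


def install_operator(namespace: str = "jupiterone") -> None:
    """Run the install steps against the current kubeconfig context."""
    ensure_namespace(namespace)
    for argv in operator_helm_steps(namespace):
        subprocess.run(argv, check=True)
```

Call `install_operator()` from your own automation; keeping the command list in a separate function makes it easy to review or log exactly what will run.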

Integration Runner

To install the Integration Runner, you need to provide your API token. The runner creates a collector with the same name as the runner. You can choose from two options for managing the API token Secret:

- Chart-Assisted (Recommended)

- External Secret (Advanced)

Chart-Assisted (Recommended)

This is the simplest method. The Helm chart automatically creates a Kubernetes Secret containing your API token in the same namespace.

Parameters:

- `runnerName`: The name of the runner, used as the Helm release name.
- `apiToken`: Your API token. The chart stores it in a Kubernetes Secret.
- `accountID`: Your JupiterOne account ID.
- `jupiterOneEnvironment` (optional): The JupiterOne environment. Defaults to `us`. You can find it by inspecting the URL when accessing the UI, such as `<environment>.jupiterone.io`.

Installation command:

```shell
helm install <runnerName> jupiterone/jupiterone-integration-runner --namespace jupiterone --set apiToken=<apiToken> --set accountID=<accountID>
```
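To fill in `jupiterOneEnvironment`, the URL check described in the parameter list can be scripted. This is a sketch under the assumption stated above, that the UI host ends in `<environment>.jupiterone.io`; the function name is hypothetical, and it simply returns the DNS label immediately before `jupiterone.io`.

```python
from urllib.parse import urlparse


def environment_from_url(url: str) -> str:
    """Return the DNS label just before 'jupiterone.io' in a JupiterOne UI URL.

    Assumes the host ends in '<environment>.jupiterone.io'.
    """
    if "://" not in url:
        url = "https://" + url  # urlparse only fills .hostname when a scheme is present
    host = (urlparse(url).hostname or "").lower()
    labels = host.split(".")
    if len(labels) < 3 or labels[-2:] != ["jupiterone", "io"]:
        raise ValueError(f"not a JupiterOne UI host: {host!r}")
    return labels[-3]
```

For example, `environment_from_url("https://us.jupiterone.io/home")` returns `"us"`, which you would pass as `--set jupiterOneEnvironment=us`. Raising an error instead of silently falling back to `us` avoids registering against the wrong environment.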

External Secret (Advanced)

This method references an existing Kubernetes Secret instead of creating one. Use this option if you manage secrets with external processes such as Sealed Secrets, External Secrets Operator, or other secret management tools.

The Secret must store the API token under the key `token`.
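A standard Kubernetes Secret that satisfies this requirement looks like the manifest built below. This is a minimal sketch: the Secret name is whatever you later pass as `secretAPITokenName`, and the function name is hypothetical. Values under `data` must be base64-encoded, which is the main thing that is easy to get wrong when writing the manifest by hand.

```python
import base64
from typing import Any, Dict


def token_secret_manifest(name: str, api_token: str,
                          namespace: str = "jupiterone") -> Dict[str, Any]:
    """Build a Secret that stores the JupiterOne API token under the key 'token'."""
    encoded = base64.b64encode(api_token.encode("utf-8")).decode("ascii")
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name, "namespace": namespace},
        "type": "Opaque",
        "data": {"token": encoded},  # the key must be exactly "token"
    }
```

Serialize the result with `json.dumps(...)` and apply it with `kubectl apply -f <file>` (kubectl accepts JSON manifests), or feed it to your secret management tool instead of committing it in plain text. For a one-off, `kubectl create secret generic <tokenName> --from-literal=token=<apiToken> --namespace jupiterone` produces an equivalent Secret.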

Parameters:

- `runnerName`: The name of the runner.
- `secretAPITokenName`: The name of the existing Kubernetes Secret.
- `accountID`: Your JupiterOne account ID.
- `createSecret`: Must be set to `false` so the Helm chart does not attempt to create the Secret.
- `jupiterOneEnvironment` (optional): The JupiterOne environment. Defaults to `us`. You can find it by inspecting the URL when accessing the UI, such as `<environment>.jupiterone.io`.

Installation command:

```shell
helm install <runnerName> jupiterone/jupiterone-integration-runner --namespace jupiterone --set createSecret=false --set secretAPITokenName=<tokenName> --set accountID=<accountID>
```
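Both installation commands differ only in which `--set` values they pass, so automation can build either one from the same parameters. This is a sketch that reproduces the two commands above; the function name is hypothetical, and you would run the result with `subprocess.run(args, check=True)`.

```python
from typing import List, Optional

RUNNER_CHART = "jupiterone/jupiterone-integration-runner"


def runner_install_args(
    runner_name: str,
    account_id: str,
    api_token: Optional[str] = None,
    secret_name: Optional[str] = None,
    environment: Optional[str] = None,
    namespace: str = "jupiterone",
) -> List[str]:
    """Build the `helm install` argv for either secret-management option."""
    if (api_token is None) == (secret_name is None):
        raise ValueError("pass exactly one of api_token or secret_name")
    args = ["helm", "install", runner_name, RUNNER_CHART, "--namespace", namespace]
    if api_token is not None:
        # Chart-Assisted: the chart creates the Secret from this value.
        args += ["--set", f"apiToken={api_token}"]
    else:
        # External Secret: reference an existing Secret that has a `token` key.
        args += ["--set", "createSecret=false",
                 "--set", f"secretAPITokenName={secret_name}"]
    args += ["--set", f"accountID={account_id}"]
    if environment is not None:
        args += ["--set", f"jupiterOneEnvironment={environment}"]
    return args
```

Passing an argv list to `subprocess.run` avoids shell quoting problems, but the token is still visible in the process list while Helm runs; the External Secret option avoids putting it on the command line at all.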

Verification

After installation, the runner should register with JupiterOne within 30 seconds. Check its status with:

```shell
kubectl get integrationrunner -n jupiterone
```

Expected output:

```text
NAME     STATE     DETAIL   REGISTRATION   AGE
runner   running            registered     2m38s
```
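In a pipeline you may want to wait for registration instead of checking by eye. This sketch polls the same `kubectl get integrationrunner` command and parses its table; it assumes the `NAME` and `REGISTRATION` columns and the `registered` value shown in the expected output above. Because the custom resource's underlying field names are not documented here, it parses the column-aligned table (slicing at the header offsets, so an empty `DETAIL` column becomes `""`) rather than using `-o jsonpath`. The function names are hypothetical.

```python
import subprocess
import time
from typing import Dict, List


def parse_kubectl_table(output: str) -> List[Dict[str, str]]:
    """Split kubectl's column-aligned table output into one dict per row."""
    lines = [line for line in output.splitlines() if line.strip()]
    if not lines:
        return []
    header, names, starts, pos = lines[0], lines[0].split(), [], 0
    for name in names:
        pos = header.index(name, pos)  # column starts where its header starts
        starts.append(pos)
        pos += len(name)
    rows = []
    for line in lines[1:]:
        row = {}
        for i, name in enumerate(names):
            end = starts[i + 1] if i + 1 < len(starts) else None
            row[name] = line[starts[i]:end].strip()
        rows.append(row)
    return rows


def wait_for_registration(name: str, namespace: str = "jupiterone",
                          timeout: float = 60.0, interval: float = 5.0) -> bool:
    """Poll until the runner reports REGISTRATION == 'registered' or time runs out."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        result = subprocess.run(["kubectl", "get", "integrationrunner", "-n", namespace],
                                capture_output=True, text=True, check=True)
        for row in parse_kubectl_table(result.stdout):
            if row.get("NAME") == name and row.get("REGISTRATION") == "registered":
                return True
        time.sleep(interval)
    return False
```

The default 60-second timeout leaves headroom over the expected 30 seconds; `wait_for_registration("runner")` returns `False` rather than raising if registration never happens.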

Assigning an Integration

- Web

- Helm

Web

Assigning an integration job to a collector requires at least one registered, available collector. Once a collector is available, defining an integration job and assigning it to the collector is straightforward.

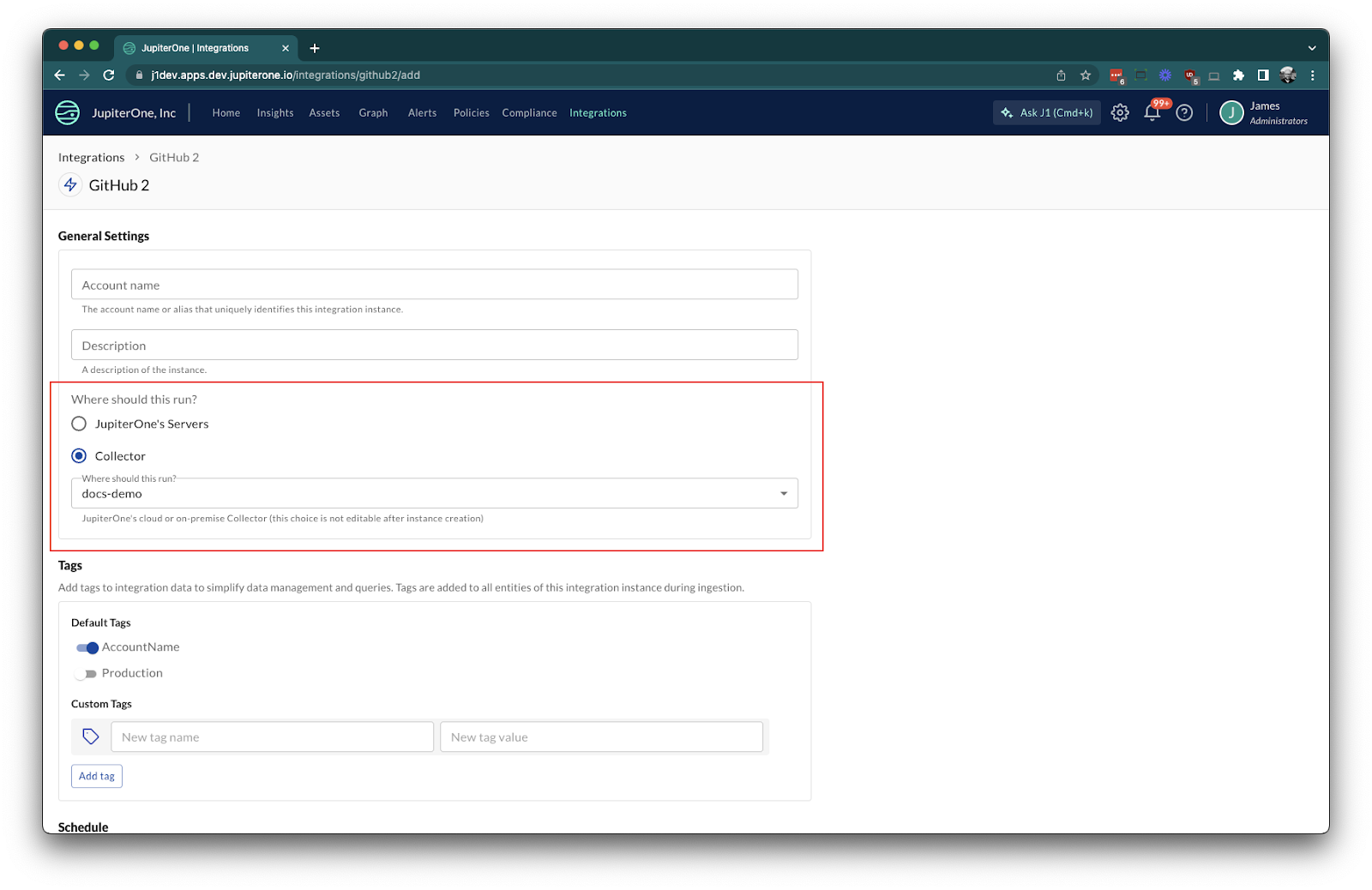

For collector-compatible integrations, complete the integration configuration as usual. During configuration, an additional option lets you choose where the integration runs.

Select Collector on the integration instance, then choose the collector that should run the integration.

Helm

You can also set up an integration with a Helm chart. This is supported through the `integrationinstance` Custom Resource Definition, which is installed as part of the Kubernetes Operator.

Integrations that support this method will have documentation on how to set this up. See the Kubernetes Managed integration for an example.

Updating the Operator

To update to the latest version:

```shell
helm repo update
helm upgrade integration-operator jupiterone/jupiterone-integration-operator --namespace jupiterone
```

Uninstalling

To remove the Integration Runner, the operator, and the `jupiterone` namespace:

```shell
helm uninstall <runnerName> --namespace jupiterone
helm uninstall integration-operator --namespace jupiterone
kubectl delete namespace jupiterone
```

Multiple Clusters

Multiple Kubernetes clusters are supported by installing the JupiterOne Integration Operator and Integration Runner Helm charts on each cluster. Each cluster is managed independently by the operator running within that cluster.

To set up multiple clusters:

- Repeat the installation steps for each cluster where you want to run collectors.

- Each cluster will have its own Integration Runner and Integration(s) managed separately.

You may use automation tools such as ArgoCD, Flux, or other GitOps solutions to automate and manage Helm chart deployments across your clusters. This allows you to keep your collector deployments consistent and up to date in all environments.

Each collector is registered independently with JupiterOne, and integration jobs can be assigned to collectors in any cluster as needed.
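If you deploy to several clusters without a GitOps tool, the same commands can be targeted at each cluster through Helm's `--kube-context` flag. This is a sketch assuming each cluster has a kubeconfig context, that the `jupiterone` namespace and a `token` Secret (External Secret option) already exist in every cluster, and that each runner gets a distinct name so its collector is easy to tell apart when assigning jobs. The context, runner, and function names are hypothetical.

```python
from typing import Dict, List

OPERATOR_CHART = "jupiterone/jupiterone-integration-operator"
RUNNER_CHART = "jupiterone/jupiterone-integration-runner"


def cluster_commands(context: str, runner_name: str, account_id: str,
                     secret_name: str, namespace: str = "jupiterone") -> List[List[str]]:
    """Operator and runner install commands pinned to one kubeconfig context."""
    target = ["--kube-context", context, "--namespace", namespace]
    return [
        ["helm", "upgrade", "--install", "integration-operator", OPERATOR_CHART, *target],
        ["helm", "upgrade", "--install", runner_name, RUNNER_CHART, *target,
         "--set", "createSecret=false",
         "--set", f"secretAPITokenName={secret_name}",
         "--set", f"accountID={account_id}"],
    ]


def plan(clusters: Dict[str, str], account_id: str, secret_name: str) -> List[List[str]]:
    """`clusters` maps kubeconfig context name -> runner (collector) name."""
    commands: List[List[str]] = []
    for context, runner_name in clusters.items():
        commands.extend(cluster_commands(context, runner_name, account_id, secret_name))
    return commands
```

Each cluster is still managed independently; the script only saves you from repeating the installation steps by hand.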

ArgoCD

You can use ArgoCD to automate Helm chart deployments in your clusters. The following example ArgoCD Applications set up the Integration Operator and the Integration Runner.

You can find the current versions of the Integration Operator and the Integration Runner by searching the Helm repository:

```shell
helm repo update
helm search repo jupiterone
```
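To fill in `targetRevision` automatically, `helm search repo` can emit JSON with `-o json`, where each entry has `name` and `version` fields (and, by default, only the latest version of each chart). This sketch maps chart names to versions, stripping the `jupiterone/` repository prefix so the keys match the `chart:` field in the Applications below; the function names are hypothetical.

```python
import json
import subprocess
from typing import Dict


def latest_chart_versions(search_json: str) -> Dict[str, str]:
    """Map chart name without repo prefix to its latest version."""
    return {entry["name"].split("/", 1)[-1]: entry["version"]
            for entry in json.loads(search_json)}


def fetch_versions(keyword: str = "jupiterone") -> Dict[str, str]:
    """Run `helm search repo <keyword> -o json` and parse the result."""
    result = subprocess.run(["helm", "search", "repo", keyword, "-o", "json"],
                            capture_output=True, text=True, check=True)
    return latest_chart_versions(result.stdout)
```

Run `helm repo update` first, as above, so the search reflects the latest published charts.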

Integration Operator

Replace <version> with the version you would like to install.

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: jupiterone-integration-operator
  namespace: argocd
spec:
  project: default
  source:
    repoURL: https://jupiterone.github.io/helm-charts
    chart: jupiterone-integration-operator
    targetRevision: <version>
  destination:
    namespace: jupiterone
    server: https://kubernetes.default.svc
  syncPolicy:
    automated:
      prune: true
      selfHeal: true
```

Integration Runner

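Replace <version>, <account-id>, and <environment> with your own values. This example uses the External Secret option, so a Secret named `j1token` with a `token` key must already exist in the `jupiterone` namespace.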

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: jupiterone-integration-runner
  namespace: argocd
spec:
  project: default
  source:
    repoURL: https://jupiterone.github.io/helm-charts
    chart: jupiterone-integration-runner
    targetRevision: <version>
    helm:
      values: |
        secretAPITokenName: j1token
        createSecret: false
        accountID: <account-id>
        jupiterOneEnvironment: <environment>
  destination:
    namespace: jupiterone
    server: https://kubernetes.default.svc
  syncPolicy:
    automated:
      prune: true
      selfHeal: true
```

Integration Instance

You can also set up certain integrations with Helm charts, which you can then deploy through ArgoCD. Here is an example that sets up the Kubernetes Managed integration. Replace <version> with the latest chart version, and set `collectorName` to the name of the collector created by your Integration Runner.

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: kubernetes-managed
  namespace: argocd
spec:
  project: default
  source:
    repoURL: https://jupiterone.github.io/helm-charts
    chart: kubernetes-managed
    targetRevision: <version>
    helm:
      values: |
        collectorName: runner
        pollingInterval: ONE_WEEK
  destination:
    namespace: jupiterone
    server: https://kubernetes.default.svc
  syncPolicy:
    automated:
      prune: true
      selfHeal: true
```
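The three Applications above share everything except the name, chart, version, and Helm values, so with many clusters it can help to generate them. This sketch builds the same objects as Python dictionaries; it assumes flat Helm values only, and the function names are hypothetical. `json.dumps` is used to render each value because a JSON scalar is also a valid YAML scalar, so strings are quoted safely and booleans become `true`/`false`.

```python
import json
from typing import Any, Dict, Optional

REPO_URL = "https://jupiterone.github.io/helm-charts"
IN_CLUSTER = "https://kubernetes.default.svc"


def helm_values_block(values: Dict[str, Any]) -> str:
    """Render flat key/value pairs as the YAML string used in spec.source.helm.values."""
    return "".join(f"{key}: {json.dumps(value)}\n" for key, value in values.items())


def argocd_application(
    name: str,
    chart: str,
    version: str,
    values: Optional[Dict[str, Any]] = None,
    server: str = IN_CLUSTER,
    namespace: str = "jupiterone",
) -> Dict[str, Any]:
    """Build an Application equivalent to the YAML examples above."""
    source: Dict[str, Any] = {"repoURL": REPO_URL, "chart": chart, "targetRevision": version}
    if values:
        source["helm"] = {"values": helm_values_block(values)}
    return {
        "apiVersion": "argoproj.io/v1alpha1",
        "kind": "Application",
        "metadata": {"name": name, "namespace": "argocd"},
        "spec": {
            "project": "default",
            "source": source,
            "destination": {"namespace": namespace, "server": server},
            "syncPolicy": {"automated": {"prune": True, "selfHeal": True}},
        },
    }
```

Write `json.dumps(app, indent=2)` to a file in the repository ArgoCD watches, or apply it with `kubectl apply -f <file>`. To target another cluster registered in ArgoCD, pass its API server URL as `server` and give each Application a distinct `name`.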

Troubleshooting

If pods are not starting or integrations are not running:

- Check pod logs:

  ```shell
  kubectl logs -n jupiterone <pod-name>
  ```

- Verify that the CRDs are present:

  ```shell
  kubectl get crd | grep jupiterone
  ```

- Double-check the authentication credentials (API token and account ID).

- Ensure network connectivity to JupiterOne services.
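When several pods are involved, the log check can be narrowed to the pods that look unhealthy. This sketch reads `kubectl get pods -o json`, flags pods whose `status.phase` is not `Running` (ignoring completed `Succeeded` pods) or that have a container not marked `ready`, and fetches recent logs for each. The selection rule is an assumption about what counts as unhealthy, and the function names are hypothetical.

```python
import json
import subprocess
from typing import Dict, List


def unhealthy_pods(pods_json: str) -> List[str]:
    """Names of pods that are not Running, or Running with a container not ready."""
    unhealthy = []
    for pod in json.loads(pods_json)["items"]:
        status = pod.get("status", {})
        phase = status.get("phase")
        if phase == "Succeeded":
            continue  # completed pods are not failures
        ready = all(c.get("ready") for c in status.get("containerStatuses", []))
        if phase != "Running" or not ready:
            unhealthy.append(pod["metadata"]["name"])
    return unhealthy


def pod_logs(name: str, namespace: str = "jupiterone", tail: int = 200) -> str:
    """Like `kubectl logs -n <namespace> <pod-name>`, limited to recent lines."""
    result = subprocess.run(
        ["kubectl", "logs", "-n", namespace, name, "--all-containers", f"--tail={tail}"],
        capture_output=True, text=True,
    )
    # A pod whose containers never started has no logs; return kubectl's error instead.
    return result.stdout if result.returncode == 0 else result.stderr


def troubleshoot(namespace: str = "jupiterone") -> Dict[str, str]:
    """Return recent logs keyed by the name of each unhealthy pod."""
    pods_json = subprocess.run(["kubectl", "get", "pods", "-n", namespace, "-o", "json"],
                               capture_output=True, text=True, check=True).stdout
    return {name: pod_logs(name, namespace) for name in unhealthy_pods(pods_json)}
```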

Known Limitations

- Unable to migrate integration jobs between collectors.

- Integration job distribution across multiple pods is still being enhanced.

- Some integrations may not be compatible with collectors.